Hi, my name is

Radha Gulhane.

Curious Mind

My areas of focus include Distributed Deep Learning, Natural Language Processing, and High-Performance Computing. I graduated from Ohio State University with a Master of Science in Computer Science & Engineering, where my research centered on Distributed DL.

About Me

Hello! I'm Radha, and I find great satisfaction in tackling intricate problems.

My focus is on distributed deep learning, which I actively researched and contributed to as a graduate researcher at NOWLAB, OSU's High-Performance Computing lab.

I currently work on the Zoom AI team, focusing on multimodal reasoning and LLM training.

In my leisure time, I pursue my passion for portrait sketching and hiking.

Here are a few technologies I’ve been working with recently:

- Large Language Models

- Distributed Deep Learning

- Reinforcement Learning

- Reward Modeling for RL

Where I’ve Worked

Senior AI Software Engineer

Zoom Communications

May 2024 - Present

- Working on Reinforcement Learning to enhance the reasoning capabilities of Vision-Language Models (VLMs).

- Implemented a novel hybrid reward-modeling mechanism for VLMs that supports both sparse and dense rewards.

- Enabled and performance-tuned inference engines to accelerate data synthesis.

Some Things I’ve Built

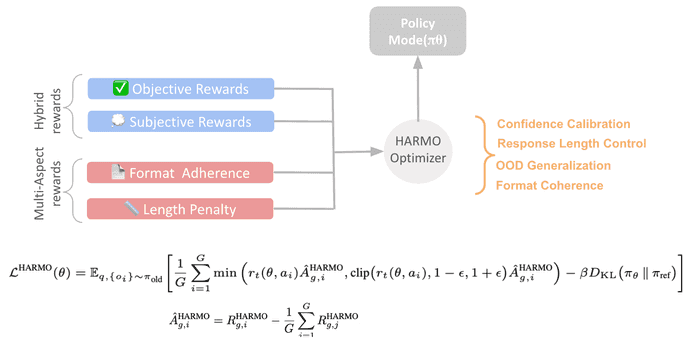

HARMO: HYBRID AND MULTI-ASPECT REWARD OPTIMIZATION

Is accuracy alone sufficient to guide reinforcement learning? How can policy optimization handle non-verifiable, out-of-distribution, and generalizable task associations alongside a verifiable, confidence-aware scorer? We present a hybrid reward modeling framework that combines (i) model-based rewards and (ii) rule-based rewards derived from domain-specific heuristics with confidence estimates. Moving beyond accuracy, we also introduce multi-aspect rewards that stabilize training and further enhance reasoning ability.

https://arxiv.org/pdf/2510.05283

- Multimodal reasoning

- Reinforcement learning

- Reward modeling
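As a rough illustration of what a confidence-aware hybrid reward can look like, the sketch below blends a rule-based score with a model-based score according to the rule's confidence, then mixes in extra per-aspect scores. The field names, the linear weighting, and the `aspect_weight` parameter are hypothetical assumptions for illustration only; this is not the formulation used in the HARMO paper.

```python
from dataclasses import dataclass


@dataclass
class RewardInputs:
    # All fields are hypothetical illustration inputs, each expected in [0, 1].
    rule_score: float        # score from a rule-based/verifiable checker
    rule_confidence: float   # how much to trust the rule-based score
    model_score: float       # score from a learned reward model
    aspect_scores: dict      # extra aspects, e.g. {"format": 1.0, "length": 0.5}


def hybrid_reward(x: RewardInputs, aspect_weight: float = 0.2) -> float:
    """Blend rule- and model-based rewards by rule confidence, then add aspect terms.

    A hypothetical weighting scheme for illustration, not the HARMO formulation.
    """
    c = min(max(x.rule_confidence, 0.0), 1.0)  # clamp confidence to [0, 1]
    # Lean on the verifiable rule when it is confident; fall back to the reward model otherwise.
    base = c * x.rule_score + (1.0 - c) * x.model_score
    if not x.aspect_scores:
        return base
    aspect = sum(x.aspect_scores.values()) / len(x.aspect_scores)  # mean aspect score
    return (1.0 - aspect_weight) * base + aspect_weight * aspect
```

For example, a confident correct answer (`rule_score=1.0`, `rule_confidence=0.9`, `model_score=0.5`) with aspect scores `{"format": 1.0, "length": 0.5}` yields 0.8 × 0.95 + 0.2 × 0.75 = 0.91. Weighting by confidence lets an unreliable rule fade out gracefully instead of being switched off abruptly.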

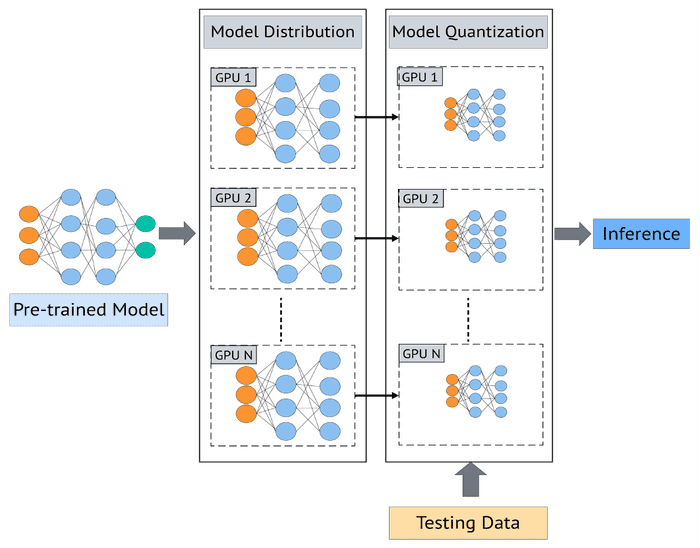

Inference for High-Res Images with Quantization and Distributed DL

InferHiRes enables inference on very high-resolution images using quantization and Distributed DL techniques. It supports half-precision and integer-only inference, delivering a 6x speedup with INT8 quantization over FLOAT32 precision with less than 1% accuracy degradation.

https://dl.acm.org/doi/10.1145/3626203.3670548

- Quantization

- Deep Neural Networks

- PyTorch

- Python

- MPI
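To show the general idea behind integer-only inference, here is a minimal sketch of symmetric per-tensor INT8 quantization in plain Python, assuming a single scale per tensor and codes clipped to [-127, 127]. It illustrates the technique only; InferHiRes's actual scheme (per-channel scales, calibration, PyTorch kernels) is not described here, and the function names are hypothetical.

```python
def quantize_int8(values):
    """Symmetric per-tensor quantization: map floats to int codes in [-127, 127]."""
    max_abs = max((abs(v) for v in values), default=0.0)
    scale = max_abs / 127.0 if max_abs > 0 else 1.0  # avoid division by zero for all-zero input
    codes = [max(-127, min(127, round(v / scale))) for v in values]
    return codes, scale


def dequantize_int8(codes, scale):
    """Recover approximate floats; error per element is at most scale / 2."""
    return [c * scale for c in codes]


def int8_dot(a_codes, a_scale, b_codes, b_scale):
    """Integer-only dot product: accumulate integer products, rescale once at the end."""
    # On hardware this would be an int32 accumulator; Python ints are arbitrary precision.
    acc = sum(x * y for x, y in zip(a_codes, b_codes))
    return acc * a_scale * b_scale
```

With `a = [0.5, -1.0, 0.25]` and `b = [2.0, 1.0, -4.0]`, the exact dot product is -1.0 and the INT8 version lands within a few hundredths of it. Because the inner loop touches only integers and the two scales are applied once, the expensive part needs no floating-point math, which is where INT8 speedups come from on hardware with fast integer instructions.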

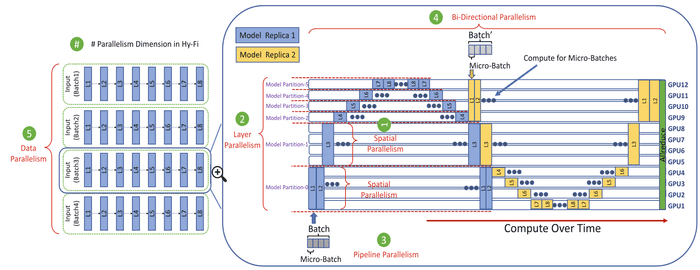

MPI4DL: Distributed Deep Learning for High Resolution Images

MPI4DL is a distributed, accelerated, and memory-efficient training framework for very high-resolution images that integrates Spatial Parallelism, Bidirectional Parallelism, Layer Parallelism, and Pipeline Parallelism.

- Deep Neural Networks

- PyTorch

- Python

- MPI
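Spatial parallelism splits each image into tiles across processes, and convolutions near tile edges need neighboring pixels (a halo) from adjacent ranks. The sketch below computes, for a hypothetical row-major grid of ranks, which pixels each rank owns and which extra halo rows and columns it would need for one stride-1 convolution layer. It is an illustration of the general technique under these assumptions, not MPI4DL's code; the function names are hypothetical, and the halo exchange itself (for example via MPI point-to-point calls) is omitted.

```python
def split_1d(length, parts, index):
    """Return [start, end) of the index-th of `parts` near-equal chunks of `length`."""
    base, extra = divmod(length, parts)
    start = index * base + min(index, extra)  # first `extra` chunks get one extra element
    end = start + base + (1 if index < extra else 0)
    return start, end


def spatial_tile(height, width, grid_rows, grid_cols, rank, kernel_size=3):
    """Tile an H x W image over a row-major grid of ranks and add a convolution halo.

    halo = kernel_size // 2 rows/cols must come from neighboring ranks so that a
    'same'-padded stride-1 convolution on the tile matches the full-image result.
    """
    halo = kernel_size // 2
    r, c = divmod(rank, grid_cols)  # rank -> (grid row, grid col), row-major
    r0, r1 = split_1d(height, grid_rows, r)
    c0, c1 = split_1d(width, grid_cols, c)
    owned = (r0, r1, c0, c1)
    # Clip the halo at image borders; those pixels come from zero padding instead.
    with_halo = (max(r0 - halo, 0), min(r1 + halo, height),
                 max(c0 - halo, 0), min(c1 + halo, width))
    return owned, with_halo
```

On a 2 x 2 grid over a 1024 x 1024 image, rank 3 owns rows and columns 512 to 1024 and needs one extra row and column (511) from its neighbors for a 3 x 3 kernel. Stacking L such layers without intermediate exchanges grows the halo to L * (kernel_size // 2), which is why exchanging halos per layer keeps the extra memory small.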

Other Noteworthy Projects

Distributed Object Storage for CORTX: B+ Tree

Added support for CRUD operations with asynchronous transaction execution for CORTX metadata storage. Additionally, assisted with the memory-limit feature and implemented several node formats for variable-sized objects, with CRC (cyclic redundancy check) support for data recovery.
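To illustrate how a checksum can guard a node that stores variable-sized objects, here is a minimal sketch using Python's `struct` and `zlib.crc32`. The header layout (CRC32, entry count, payload length) and the length-prefixed record format are hypothetical assumptions, not CORTX's actual on-disk node formats.

```python
import struct
import zlib

# Hypothetical node header: CRC32 of the payload, number of entries, payload length.
HEADER = struct.Struct("<IHI")
RECORD = struct.Struct("<HH")  # per-record key length and value length


def pack_node(entries):
    """Serialize (key, value) byte pairs as length-prefixed records behind a CRC32 header."""
    body = b"".join(RECORD.pack(len(k), len(v)) + k + v for k, v in entries)
    return HEADER.pack(zlib.crc32(body), len(entries), len(body)) + body


def unpack_node(blob):
    """Verify the checksum, then decode the variable-sized records."""
    crc, count, length = HEADER.unpack_from(blob, 0)
    body = blob[HEADER.size:HEADER.size + length]
    if len(body) != length or zlib.crc32(body) != crc:
        raise ValueError("node checksum mismatch: node is corrupt")
    entries, off = [], 0
    for _ in range(count):
        klen, vlen = RECORD.unpack_from(body, off)
        off += RECORD.size
        key = body[off:off + klen]
        off += klen
        value = body[off:off + vlen]
        off += vlen
        entries.append((key, value))
    return entries
```

A CRC only detects corruption; actual recovery still needs a second source of truth, such as a replica or a transaction log, from which the rejected node can be rebuilt.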

Data Parallelism: Distributed Deep Learning

Implemented data parallelism for the ResNet model using both Horovod and PyTorch Distributed. Also evaluated weak- and strong-scaling performance across varying numbers of nodes to identify the configuration that offers the best scalability and efficiency.
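Weak- and strong-scaling results are usually summarized as parallel efficiency. Below is a minimal sketch of the standard definitions, assuming wall-clock time per fixed unit of work (for example, per epoch) measured against a baseline node count. The `best_node_count` helper, its 0.7 threshold, and the timings in the example are hypothetical, not results from this project.

```python
def strong_scaling_efficiency(t_base, t_n, n, n_base=1):
    """Fixed total problem size: ideal time shrinks in proportion to node count."""
    return (t_base * n_base) / (t_n * n)


def weak_scaling_efficiency(t_base, t_n):
    """Fixed work per node: ideal time stays constant as nodes are added."""
    return t_base / t_n


def best_node_count(times, threshold=0.7):
    """Largest node count whose strong-scaling efficiency stays at or above `threshold`.

    `times` maps node count -> measured time; the smallest count is the baseline.
    """
    base_n = min(times)
    acceptable = [
        n for n, t in times.items()
        if strong_scaling_efficiency(times[base_n], t, n, base_n) >= threshold
    ]
    return max(acceptable)  # the baseline always qualifies (efficiency 1.0)
```

With made-up timings `{1: 100.0, 2: 55.0, 4: 30.0, 8: 20.0}`, the efficiencies are 1.0, about 0.91, about 0.83, and 0.625, so four nodes is the largest configuration that stays above the 0.7 threshold.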

Pipeline Parallelism: Distributed Deep Learning

Implemented pipeline parallelism using DeepSpeed for the AlexNet and VGG19 models, then evaluated the performance trends of pipeline parallelism for both models across different numbers of GPU nodes.
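A useful back-of-the-envelope model for such trends is the pipeline "bubble": with p stages and m micro-batches, a synchronous schedule leaves devices idle for a fraction (p - 1) / (m + p - 1) of the time. The sketch below encodes that textbook estimate, assuming equal per-stage compute time and ignoring communication; it is not a model of DeepSpeed's actual runtime, and the function names are hypothetical.

```python
def pipeline_bubble_fraction(stages, micro_batches):
    """Idle fraction of a synchronous pipeline schedule with equal stage times."""
    return (stages - 1) / (micro_batches + stages - 1)


def ideal_pipeline_speedup(stages, micro_batches):
    """Speedup over one device running every stage, ignoring communication."""
    # One device: stages * micro_batches time units; pipeline: micro_batches + stages - 1.
    return stages * micro_batches / (micro_batches + stages - 1)
```

For 4 stages and 8 micro-batches, the bubble is 3/11 (about 27%) and the ideal speedup is 32/11 (about 2.9x rather than 4x); adding stages without adding micro-batches grows the bubble, which is one reason pipeline speedups flatten as GPU counts increase.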

Machine Learning Algorithms for Clustering

Applied clustering techniques to three distinct datasets (small_Xydf, large1_Xydf, and large2_Xydf), then evaluated and compared several clustering algorithms on each dataset to determine the most suitable one.

Machine Learning Algorithms for Classification

Implemented several classification models on the Hotel Booking dataset to predict which future reservations are at risk of cancellation.

Metadata Object Storage

Implemented B-trees, B+ trees, and B-epsilon trees, data structures widely used for object storage in file systems and databases (for example, MongoDB indexes use B-trees, and SQL databases commonly use B+ trees).

Education

Master of Science

Computer Science & Engineering

Ohio State University

August 2022 - May 2024

- GPA: 3.85/4.0

- Transcript

What’s Next?

Get In Touch

If you'd like to chat or are keen to dive into my experience, recent work, and interests, feel free to drop me an email.

Say Hello